Has a rival to vector databases arrived? Is another batch of startups in this space about to fall?

……

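That is some of what the tech community was saying after OpenAI launched the retrieval feature of its Assistants. The reason: this feature gives users RAG (Retrieval-Augmented Generation) capabilities for question answering over a knowledge base. Until then, the vector database was generally seen as a key component of a RAG solution, used to reduce LLM "hallucinations".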

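So here is the question: how does OpenAI's built-in Assistant retrieval compare with an open-source RAG solution built on a vector database? Which one comes out ahead?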

In a spirit of rigor, we evaluated this question quantitatively, and the result is interesting: OpenAI really is strong!

However, it falls somewhat short of the open-source RAG solution built on a vector database!

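Below, I walk through the whole evaluation. It is worth stressing that such an evaluation is not easy: a handful of test samples simply cannot measure the many aspects of a RAG application's performance.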

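We therefore need a fair, objective RAG evaluation tool, applied to a suitable dataset, to produce a quantitative evaluation and analysis with reproducible results.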

Without further ado, here is the process!

I. Evaluation Tool

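Ragas (https://docs.ragas.io/en/latest/) is an open-source framework dedicated to evaluating RAG applications. Users only need to supply some of the information produced during the RAG process, such as question, contexts, and answer, and Ragas uses it to score several metrics quantitatively. After installing Ragas with pip, an evaluation takes only a few lines of code: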

```python
from ragas import evaluate
from datasets import Dataset

# `dataset` must be prepared beforehand as a Hugging Face dataset in this format:
# Dataset({
#     features: ['question', 'contexts', 'answer', 'ground_truths'],
#     num_rows: 25
# })

results = evaluate(dataset)
# {'ragas_score': 0.860, 'context_precision': 0.817,
#  'faithfulness': 0.892, 'answer_relevancy': 0.874}
```

Ragas offers many sub-categories of scoring metrics, for example:

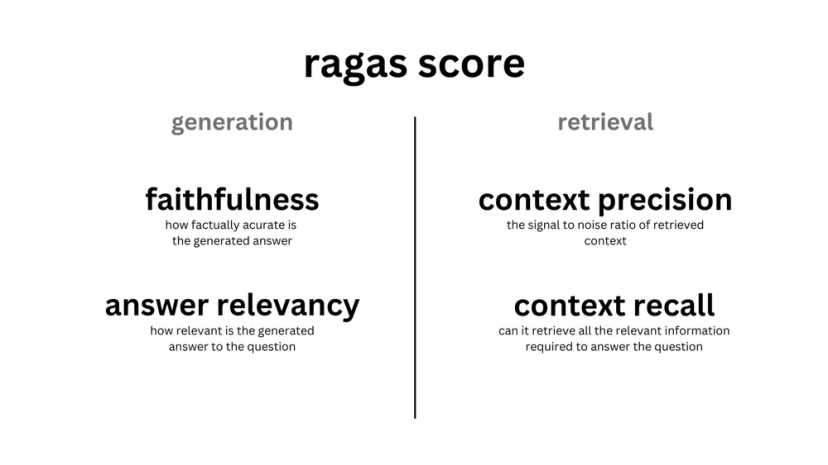

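• From the generation side: Faithfulness, which measures whether the answer is consistent with (and so trustworthy given) the retrieved context, and Answer relevancy, which measures how relevant the answer is to the question.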

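• From the retrieval side: Context precision (the precision of the retrieved knowledge), Context recall (the recall of the retrieved knowledge), and Context Relevancy (how relevant the retrieved content is).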

• From comparing the answer with the ground truth: Answer semantic similarity (how semantically close the answer is to the ground truth) and Answer Correctness (whether the answer is correct).

• From the answer itself: various Aspect Critiques.


Image source: https://docs.ragas.io/en/latest/concepts/metrics/index.html
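To make the generation-side metrics more concrete, here is a toy calculation in the spirit of Faithfulness, which the Ragas docs describe as the share of claims in the answer that can be inferred from the retrieved context. This is a minimal sketch, not Ragas's implementation: Ragas uses an LLM to split the answer into claims and judge each one, whereas here the claims are written by hand and "supported" is approximated by a case-insensitive substring check (an assumption made purely for illustration).

```python
def faithfulness(claims: list[str], context: str) -> float:
    """Toy faithfulness: fraction of answer claims supported by the context.

    Support is approximated by a case-insensitive substring test, a stand-in
    (assumption) for the LLM judgement Ragas actually uses.
    """
    if not claims:
        return 0.0
    context_lower = context.lower()
    supported = sum(1 for claim in claims if claim.lower() in context_lower)
    return supported / len(claims)


context = "Einstein was born in Germany on 14 March 1879."
claims = ["born in Germany", "14 March 1879", "won two Nobel Prizes"]
print(round(faithfulness(claims, context), 3))  # 2 of 3 claims supported -> 0.667
```

The third claim is not backed by the context, so it lowers the score even if it came from the model's own knowledge, which is exactly the behavior this metric is meant to penalize.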

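Each metric looks at a different aspect. Take answer correctness as an example: it is outcome-oriented and directly measures whether the RAG application's answer is correct. Here is a high-scoring and a low-scoring answer for comparison: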

```text
Ground truth: Einstein was born in 1879 in Germany.
High answer correctness: In 1879, in Germany, Einstein was born.
Low answer correctness: In Spain, Einstein was born in 1879.
```

For details on the other metrics, see the official documentation (https://docs.ragas.io/en/latest/concepts/metrics/index.html).
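The Ragas documentation describes answer correctness as a weighted combination of semantic similarity and factual overlap with the ground truth, where the factual part is an F1 score over true-positive (TP), false-positive (FP), and false-negative (FN) statements: F1 = TP / (TP + 0.5 × (FP + FN)). The sketch below covers only that factual F1 arithmetic for the Einstein example. The facts are hand-written (attribute, value) pairs, an assumption for illustration; in Ragas an LLM extracts and classifies the statements, and details may vary between versions.

```python
def factual_f1(answer_facts: set[tuple[str, str]],
               truth_facts: set[tuple[str, str]]) -> float:
    """F1 over hand-extracted facts.

    TP: facts in both; FP: only in the answer; FN: only in the ground truth.
    """
    tp = len(answer_facts & truth_facts)
    fp = len(answer_facts - truth_facts)
    fn = len(truth_facts - answer_facts)
    if tp == 0:
        return 0.0
    return tp / (tp + 0.5 * (fp + fn))


truth = {("birth_year", "1879"), ("birth_place", "Germany")}
high = {("birth_year", "1879"), ("birth_place", "Germany")}
low = {("birth_year", "1879"), ("birth_place", "Spain")}
print(factual_f1(high, truth))  # 1.0
print(factual_f1(low, truth))   # 0.5 (1 TP, 1 FP, 1 FN)
```

The low-scoring answer keeps the correct year, so it is not scored zero; the wrong country counts once as a false positive and the missing "Germany" once as a false negative.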

The key point is that each metric measures a different aspect, so users can evaluate a RAG application comprehensively, from several angles.

II. Evaluation Dataset

We use the Financial Opinion Mining and Question Answering (fiqa) Dataset (https://sites.google.com/view/fiqa/) as the test dataset, for the following reasons:

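• It belongs to the specialized financial domain, its corpus comes from very diverse sources, and it includes human-written answers. It covers niche financial knowledge that is unlikely to appear in GPT's training data, which makes it well suited to serve as an external knowledge base and to contrast with an LLM that has never seen this knowledge.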

• The dataset was originally built to evaluate Information Retrieval (IR), so it comes with annotated knowledge passages that can be used directly as the ground truth for retrieval.

• Ragas itself treats it as a standard getting-started dataset (https://docs.ragas.io/en/latest/getstarted/evaluation.html#the-data) and provides a script for building it (https://github.com/explodinggradients/ragas/blob/main/experiments/baselines/fiqa/dataset-exploration-and-baseline.ipynb). It therefore has some community adoption and is broadly accepted, which makes it a suitable baseline.
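Because fiqa ships with annotated passages, retrieval can also be checked with classic IR precision and recall against those passages. The sketch below is that textbook calculation, not Ragas's LLM-judged context_precision / context_recall, and the passage IDs in it are hypothetical.

```python
def precision_recall(retrieved: list[str], relevant: set[str]) -> tuple[float, float]:
    """Classic IR precision and recall of retrieved passage IDs vs. annotated ones."""
    unique = list(dict.fromkeys(retrieved))  # drop duplicate IDs, keep order
    hits = sum(1 for pid in unique if pid in relevant)
    precision = hits / len(unique) if unique else 0.0
    recall = hits / len(relevant) if relevant else 0.0
    return precision, recall


# Hypothetical passage IDs: 4 retrieved, 2 annotated as relevant, both found.
print(precision_recall(["p3", "p7", "p9", "p1"], {"p1", "p3"}))  # (0.5, 1.0)
```

Retrieving more passages tends to raise recall at the cost of precision, which is one reason top_k is worth tuning per dataset.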

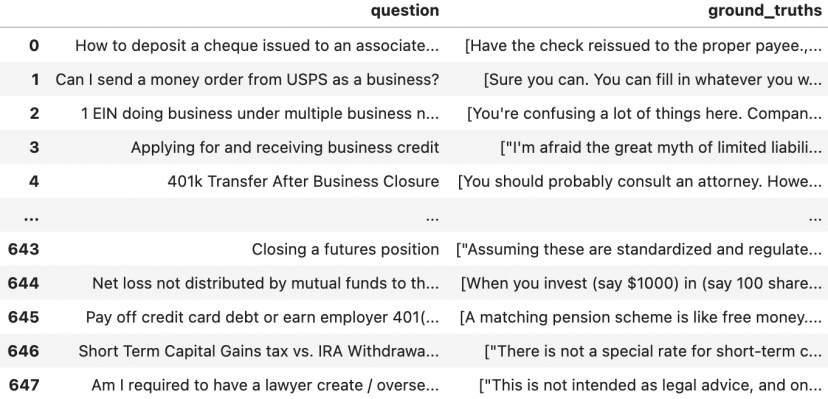

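We first use the conversion script to turn the raw fiqa dataset into a format Ragas can easily process. A quick look at the resulting evaluation dataset: it has 647 finance-related queries, and each question's list of source knowledge passages is its ground_truths, which usually holds 1 to 4 passages.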

Example from the fiqa dataset

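At this point the test data is ready. We only need to send each entry in the question column to the RAG application, combine the application's answers and retrieved contexts with the ground truths, and score all of it with Ragas.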
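The loop described above can be sketched as follows. It assumes a hypothetical `rag` callable that takes a question and returns `(answer, retrieved_contexts)`; neither OpenAI assistant nor our pipeline exposes exactly this function, so it stands in for whichever application is being evaluated. The column names follow the older Ragas format used in this article.

```python
from typing import Callable

# Hypothetical interface: question -> (answer, list of retrieved context strings).
RagFn = Callable[[str], tuple[str, list[str]]]


def build_eval_columns(questions: list[str],
                       ground_truths: list[list[str]],
                       rag: RagFn) -> dict[str, list]:
    """Ask the RAG app every question and collect the columns Ragas scores."""
    if len(questions) != len(ground_truths):
        raise ValueError("questions and ground_truths must be aligned")
    columns: dict[str, list] = {"question": [], "contexts": [], "answer": [], "ground_truths": []}
    for question, truths in zip(questions, ground_truths):
        answer, contexts = rag(question)
        columns["question"].append(question)
        columns["contexts"].append(contexts)
        columns["answer"].append(answer)
        columns["ground_truths"].append(truths)
    return columns


def fake_rag(question: str) -> tuple[str, list[str]]:
    # Stand-in for a real RAG application (assumption): canned answer and context.
    return f"Answer to: {question}", [f"Context for: {question}"]


columns = build_eval_columns(
    ["What is an IRA?"], [["An IRA is an individual retirement account."]], fake_rag
)
print(columns["answer"])  # ['Answer to: What is an IRA?']
```

With the `datasets` library installed, `Dataset.from_dict(columns)` turns this dictionary into the Hugging Face dataset that `evaluate` expects.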

III. RAG Setups Under Comparison

Next, we build the two RAG applications to compare and benchmark: OpenAI assistant, and a custom RAG pipeline built on a vector database.

1. OpenAI assistant

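Following OpenAI's official guide to assistant retrieval (https://platform.openai.com/docs/assistants/tools/knowledge-retrieval), we created the assistant and uploaded the knowledge, and we used the method OpenAI documents (https://platform.openai.com/docs/assistants/how-it-works/message-annotations) to obtain the answer and the retrieved contexts. Everything else was left at the default settings.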

2. RAG pipeline based on a vector database

We then built a RAG pipeline based on vector retrieval: the Milvus (https://milvus.io/) vector database stores the knowledge, the BAAI/bge-base-en model from HuggingFaceEmbeddings (https://python.langchain.com/docs/integrations/platforms/huggingface) produces the embeddings, and LangChain (https://python.langchain.com/docs/get_started/introduction) components handle document loading and Agent construction.
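At its core, the retrieval step ranks stored chunks by vector similarity to the embedded question and returns the top_k of them. The sketch below shows only that idea, using an in-memory dictionary and a brute-force cosine scan as a stand-in for Milvus (which adds persistence, indexing, and scale on top), and tiny hand-made vectors in place of bge-base-en embeddings. Chunk names and vectors are hypothetical.

```python
import math


def cosine(a: list[float], b: list[float]) -> float:
    """Cosine similarity of two equal-length vectors (0.0 if either is all zeros)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def top_k(query_vec: list[float], chunk_vecs: dict[str, list[float]], k: int = 2) -> list[str]:
    """Return the IDs of the k chunks most similar to the query (brute-force scan)."""
    ranked = sorted(chunk_vecs, key=lambda cid: cosine(query_vec, chunk_vecs[cid]), reverse=True)
    return ranked[:k]


# Hypothetical 3-dimensional "embeddings"; real bge-base-en vectors have 768 dimensions.
chunks = {
    "ira_withdrawal": [0.9, 0.1, 0.0],
    "ira_vs_401k": [0.6, 0.4, 0.0],
    "mortgage_rates": [0.0, 0.2, 0.9],
}
query = [1.0, 0.0, 0.0]  # pretend embedding of an IRA question
print(top_k(query, chunks, k=2))  # ['ira_withdrawal', 'ira_vs_401k']
```

In the real pipeline, the retrieved chunk texts are then placed into the LLM prompt as context.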

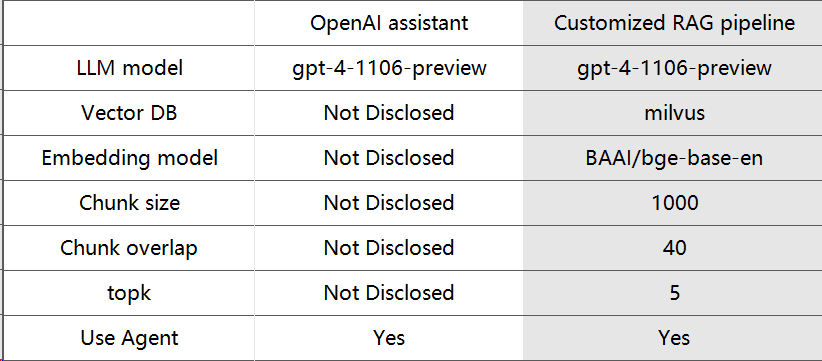

Here is how the two setups compare:

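Note that both setups use the same LLM, gpt-4-1106-preview. Because OpenAI's implementation is closed-source, its other strategies probably differ from ours in many ways. For reasons of space we do not go into implementation details here; see our implementation code (https://github.com/milvus-io/bootcamp/tree/master/evaluation).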

IV. Results and Analysis

1. Experimental Results

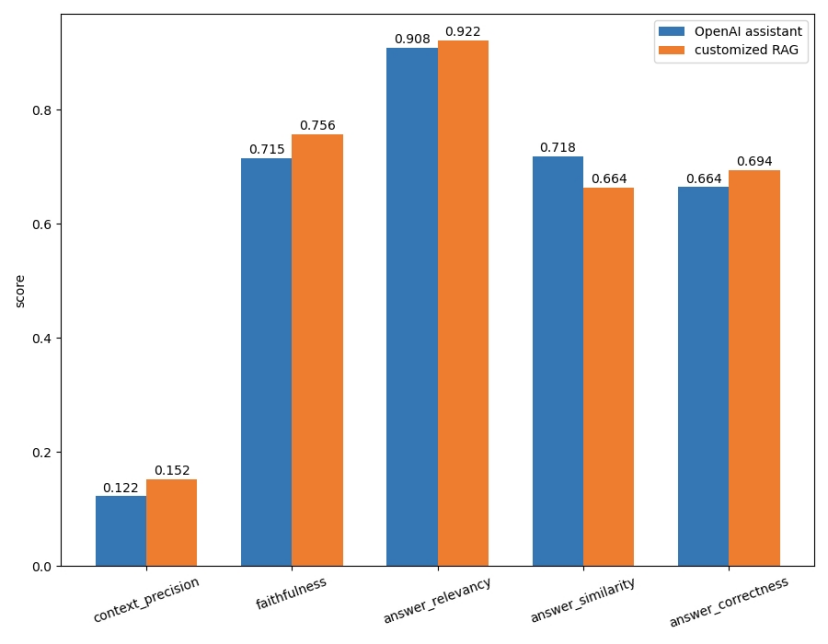

We scored both setups with several Ragas metrics and obtained the following per-metric comparison:

Per-metric comparison

Of the 5 metrics we tracked, OpenAI assistant beats the custom RAG pipeline only on answer_similarity; on all the others it scores slightly lower.

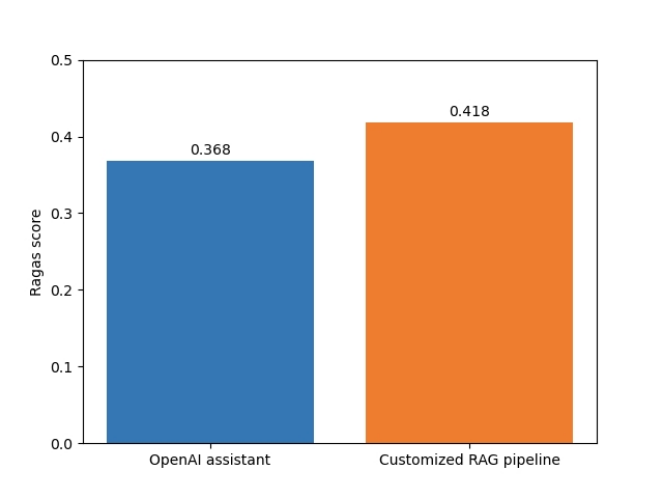

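Ragas can also combine the individual metrics into one overall score, the Ragas score, by taking their harmonic mean. The harmonic mean penalizes low-scoring metrics. Overall, OpenAI assistant's Ragas score is also lower than that of the custom RAG pipeline.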

Ragas score comparison
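To see why a harmonic mean penalizes low scores, here is a minimal sketch of the calculation (the function name is ours, not part of the Ragas API). Two metric sets with the same arithmetic mean of 0.8 end up with different overall scores when one metric is weak.

```python
def ragas_style_score(metrics: dict[str, float]) -> float:
    """Harmonic mean of metric scores; a single zero metric drives the result to 0."""
    values = list(metrics.values())
    if not values:
        raise ValueError("need at least one metric")
    if any(v == 0 for v in values):
        return 0.0
    return len(values) / sum(1 / v for v in values)


balanced = {"faithfulness": 0.8, "answer_relevancy": 0.8}
lopsided = {"faithfulness": 1.0, "answer_relevancy": 0.6}
print(round(ragas_style_score(balanced), 3))  # 0.8
print(round(ragas_style_score(lopsided), 3))  # 0.75, although the arithmetic mean is still 0.8
```

As a sanity check, the three metric values in the Ragas example output earlier (0.817, 0.892, 0.874) give a harmonic mean of about 0.860, matching the ragas_score printed there.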

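So why these results? After comparing a large number of individual results, we found that the RAG pipeline built on a vector database has the following advantages over OpenAI assistant: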

1) OpenAI assistant tends to answer from its own knowledge and makes little use of the retrieved knowledge.

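This may stem from its internal Agent configuration: when a question concerns facts GPT might already know, it is more confident and relies on what it learned in training rather than on the retrieved knowledge, even though the retrieved knowledge may contradict its training. For example: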

Question:

```text
Are personal finance / money management classes taught in high school, anywhere?
```

OpenAI assistant's answer:

```text
Personal finance and money management classes are indeed taught in some high schools, although the availability and requirement of such classes can vary greatly depending on the country and even within regions of a country. In the United States, for example, the Council for Economic Education (CEE) conducts surveys and reports on the state of financial education in K-12 schools. Their findings suggest that as of my last update, a growing number of states have integrated personal finance into their educational standards, and some have mandated that students take a personal finance course in order to graduate.

Although I don't have access to a live database or the most recent studies, you can often find information about financial education in schools by:

1. Checking government education websites.
2. Looking at reports from financial education nonprofits and councils.
3. Contacting local school boards or departments of education.

If you are interested in specific locations or have a particular focus in mind, you could specify that so I could provide more directed information, if available.
```

Is this answer correct? Taken on its own it looks fine, but note the sentence "Although I don't have access to a live database or the most recent studies": the assistant stresses that it cannot look up current information, which shows it did not use the content of the uploaded knowledge documents.

Now look at the ground truth, the correct answer annotated in the dataset:

```text
["In Houston, Texas USA where I went to a private high school they had a half-semester class in personal finance, but it was optional and didn't give you any credits towards graduation. You are right though, it should be a standard class. After all, who doesn't need that information in their adult lives, and not everyone goes to college."
...
'"We had a ""civics"" class when I was a freshman in high school. This was in the Ann Arbor, MI public schools. It covered the very basics (how to balance your checkbook, what are stocks, how do income taxes work, what is interest, etc.) of money management along with an overview of politics and the legal system. It was a really light class, though, and didn\'t go deeply into personal finance and money management. I agree that such a class would be very valuable, as would cooking, nutrition, and basic home and car repair."']
```

The documents contain many concrete examples that answer this question, and OpenAI's answer uses none of them. It treated the question as simple enough to answer directly and ignored the information in the knowledge base that the answer needed.

Next, here is the answer from the vector-database-based RAG pipeline:

```text
Personal finance and money management classes are indeed taught in some high schools, but the availability and requirement of such classes can vary greatly. For example, in Houston, Texas, a private high school offered an optional half-semester class in personal finance that did not provide credits towards graduation. Staples High School in Westport, Connecticut, introduced a personal financial management class focused on using mathematics to develop financial literacy skills, covering topics such as earnings, banking, credit cards, loans, taxes, insurance, investing, budgeting, and buying personal property. In Ann Arbor, Michigan, public schools offered a 'civics' class that included basic money management topics. Additionally, in the UK, the 'Every Child Matters' framework includes 'achieving economic well-being' as an important element, and some schools use a School Rewards System to teach kids about finance and economy. While these examples show that such classes exist, they are not universally required or standardized, and the depth and focus of the curriculum can vary.
```

This answer draws directly on the ground truth (for example, the Houston and Ann Arbor cases) and weaves that knowledge into the response, which is exactly what users need.

2) OpenAI's knowledge chunking and retrieval leave room for improvement; the open-source custom solution does better

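By inspecting the knowledge the assistant retrieves at the intermediate step, we can analyze how it chunks the knowledge documents, or infer how well its embedding model performs. For example: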

Question:

```text
Pros / cons of being more involved with IRA investments [duplicate]
```

OpenAI assistant's intermediate retrieved chunk:

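```text
['PROS: CONS']
```

This is clearly a bad retrieval result, and it is the only chunk retrieved. First, the chunking is poor: the content that followed these headings was cut off. Second, the embedding model failed to retrieve the more important chunks that could answer the question and only returned a chunk whose wording resembles the question.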

Chunks retrieved by the custom RAG pipeline:

```text
['in the tax rate, there\'s also a significant difference in the amount being taxed. Thus, withdrawing from IRA is generally not a good idea, and you will never be better off with withdrawing from IRA than with cashing out taxable investments (from tax perspective). That\'s by design."'
"Sounds like a bad idea. The IRA is built on the power of compounding. Removing contributions will hurt your retirement savings, and you will never be able to make that up. Instead, consider tax-free investments. State bonds, Federal bonds, municipal bonds, etc. For example, I invest in California muni bonds fund which gives me ~3-4% annual dividend income - completely tax free. In addition - there's capital appreciation of your fund holdings. There are risks, of course, for example rate changes will affect yields and capital appreciation, so consult with someone knowledgeable in this area (or ask another question here, for the basics). This will give you the same result as you're expecting from your Roth IRA trick, without damaging your retirement savings potential."
"In addition to George Marian's excellent advice, I'll add that if you're hitting the limits on IRA contributions, then you'd go back to your 401(k). So, put enough into your 401(k) to get the match, then max out IRA contributions to give you access to more and better investment options, then go back to your 401(k) until you top that out as well, assuming you have that much available to invest for retirement."
"While tax deferral is a nice feature, the 401k is not the Holy Grail. I've seen plenty of 401k's where the investment options are horrible: sub-par performance, high fees, limited options. That's great that you've maxed out your Roth IRA. I commend you for that. As long as the investment options in your 401k are good, then I would stick with it."
"retirement plans which offer them good cheap index funds. These people probably don't need to worry quite as much. Finally, having two accounts is more complicated. Please contact someone who knows more about taxes than I am to figure out what limitations apply for contributing to both IRAs and 401(k)s in the same year."]
```

Our own RAG pipeline retrieved many passages about IRA investing, and this content was effectively incorporated into the LLM's final answer.

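Note also that vector retrieval achieves an effect similar to BM25-style term-based retrieval here: every retrieved chunk contains the key term the question needs, "IRA". So, at least in this example, vector retrieval works not only at the level of overall semantics but also matches individual keywords about as well as term-frequency retrieval would.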
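For reference, BM25 is the classic term-frequency baseline mentioned here: it scores a chunk by how often the query terms occur in it, weighted by how rare each term is across the collection and normalized by chunk length. Below is a minimal sketch of Okapi BM25, assuming the common `log(1 + ...)` IDF variant, naive lowercase whitespace tokenization, and the widely used defaults k1 = 1.5 and b = 0.75; the chunks are short hypothetical paraphrases of the IRA example, not the actual fiqa passages.

```python
import math
from collections import Counter


def bm25_scores(query: str, docs: list[str], k1: float = 1.5, b: float = 0.75) -> list[float]:
    """Okapi BM25 score of each doc for the query (whitespace tokenization)."""
    if not docs:
        return []
    tokenized = [doc.lower().split() for doc in docs]
    n_docs = len(tokenized)
    avgdl = sum(len(toks) for toks in tokenized) / n_docs
    # Document frequency: how many docs contain each term.
    df = Counter(term for toks in tokenized for term in set(toks))
    scores = []
    for toks in tokenized:
        tf = Counter(toks)
        score = 0.0
        for term in query.lower().split():
            if term not in tf:
                continue  # terms absent from this doc contribute nothing
            idf = math.log((n_docs - df[term] + 0.5) / (df[term] + 0.5) + 1)
            length_norm = tf[term] + k1 * (1 - b + b * len(toks) / avgdl)
            score += idf * tf[term] * (k1 + 1) / length_norm
        scores.append(score)
    return scores


docs = [
    "withdrawing from ira is generally not a good idea",
    "consider tax-free municipal bonds instead",
    "max out ira contributions then go back to your 401(k)",
]
print(bm25_scores("ira investments", docs))
```

Only chunks that literally contain "ira" get a nonzero score; the bond chunk scores zero even though it is on topic, which is where embedding-based retrieval can additionally help.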

2. Other Aspects

Beyond the experimental results, OpenAI assistant has some clear disadvantages compared with a more flexible, custom open-source RAG solution:

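• OpenAI assistant does not let you adjust the parameters of its RAG process; internally it is a black box, so it cannot be optimized. A custom RAG solution, by contrast, lets you tune components and parameters such as top_k, chunk size, and the embedding model, and so can be optimized for specific data.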

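• OpenAI limits how much data you can store, whereas a vector database can hold massive amounts of knowledge. Each file uploaded to OpenAI is capped at 512 MB and may not exceed 2,000,000 tokens.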

As a result, OpenAI assistant cannot support RAG services with more complex business logic, larger data volumes, or heavier customization.
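Chunk size and overlap, named in the first point above, are typical examples of the knobs a custom pipeline exposes. The sketch below is a plain fixed-size character splitter with overlap, a simplified stand-in (assumption) for the text splitters LangChain provides; the defaults `chunk_size=200` and `overlap=50` are illustrative, not the values used in our experiment. Overlap reduces the chance that a heading such as "PROS:" ends up in a chunk separated from the content that follows it, the failure seen in the IRA example.

```python
def chunk_text(text: str, chunk_size: int = 200, overlap: int = 50) -> list[str]:
    """Split text into fixed-size character windows that overlap by `overlap` chars."""
    if chunk_size <= 0:
        raise ValueError("chunk_size must be positive")
    if not 0 <= overlap < chunk_size:
        raise ValueError("overlap must be in [0, chunk_size)")
    step = chunk_size - overlap
    chunks = []
    for start in range(0, len(text), step):
        chunks.append(text[start:start + chunk_size])
        if start + chunk_size >= len(text):
            break  # the last window already reaches the end of the text
    return chunks


text = "PROS: lower fees, more fund choices. CONS: more time spent managing the account."
for chunk in chunk_text(text, chunk_size=30, overlap=10):
    print(repr(chunk))
```

Each chunk repeats the last 10 characters of the previous one, so text near a boundary appears in two chunks instead of being split cleanly between them.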

V. Summary

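Using the Ragas evaluation tool, we compared and analyzed OpenAI assistant and an open-source RAG solution based on a vector database in detail. Although OpenAI assistant does perform reasonably well at retrieval, it falls behind the vector-retrieval RAG solution in answer quality and retrieval performance, and the Ragas metrics reflect this conclusion quantitatively.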

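Therefore, to build more powerful RAG applications with better results, developers can consider building custom retrieval on a vector database such as Milvus (https://zilliz.com/what-is-milvus) or Zilliz Cloud (https://cloud.zilliz.com.cn/signup), which offers better results and more flexibility.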