ByteDance Data Platform: ClickHouse-based Complex Query Implementation and Optimization

原創(chuàng) 精選In today's market, ClickHouse is one of the most popular column-oriented database management systems (DBMS). A rising star in the field, ClickHouse has led a new wave of analytical databases in the industry with its impressive performance advantages, and it has a much faster query speed than most other database management systems of the same type.

While ClickHouse can manage large volumes of enterprise business data, it is susceptible to query exception problems in complex queries, which may adversely impact the regular operation of the business.

In this article, we invited Mr. Dong Yifeng, a senior research and development engineer of ByteDance, to introduce how his team solves ClickHouse's complex queries. As the largest user of ClickHouse in China, ByteDance has acquired significant technical experience in this versatile database management system.

Project background

ClickHouse's execution model is similar to that of big data engines such as Druid and ES, and its basic query model can be divided into two phases. To begin with, the Coordinator sends the query to the corresponding Worker node. Once the Worker nodes have completed their computations, the Coordinator aggregates, deals with the data received from the Worker nodes, and returns the results.

A two-stage execution model is better suited for many common business scenarios today, such as large wide-table queries, where ClickHouse excels. ClickHouse offers simplicity and efficiency; in most cases, simplicity means efficiency. However, as the enterprise business grows, increasingly complex business scenarios present ClickHouse with three different types of challenges.

- Challenge No.1:

When a higher volume of data is returned, the processing is more complicated. Coordinator will be put under greater pressure and can quickly become a bottleneck for the Query. If, for example, some agg operators for recomputation, such as Count Distinct, utilize hash tables for de-duplication, the second stage must merge the hash tables of each Worker on the Coordinator's host. It will be very time-consuming to perform this computation, and it cannot be parallelized.

- Challenge No.2:

Because the current ClickHouse model does not support Shuffle, the right table must contain all the data. A regular or global join, when a right table contains a large amount of data that is stored in memory, OOM may occurs. In the event that the data is spilled to disk, although the memory problem can be resolved, the query performance will be adversely affected due to the cost of disk IO and serialization/deserialization. It should be noted that when Join uses Hash Join if the right table is a large one, it will also take longer to complete the build. Therefore, the community has recently optimized the parallel building of some right tables, and the data is split according to the Join key to building multiple hash tables simultaneously. However, a further Split operation is required for the left and right tables.

- Challenge No.3:

The issue is complex queries (multi-table Join, nested subqueries, window function, etc.). ClickHouse does not support such scenarios well because it does not enhance execution parallelism by Shuffle to spread the data, and its generated pipeline may not be fully parallel. Therefore, in some scenarios, the cluster's full capabilities cannot be utilized.

With the increasing complexity of enterprise business, the need for complex queries, especially with multiple rounds of distributed Joins with many agg computations, will become stronger. It should be noted that it is not necessary in this case for all Queries to follow the pattern that ClickHouse offers, namely, generating large wide tables through an upstream data ETL process. It is more costly to perform the ETL process and may result in some data redundancy.

In this case, since enterprise cluster resources are limited, we hope to fully use machine resources to handle increasingly complex business scenarios and SQL queries. Our objective is to be able to support complex queries efficiently using ClickHouse.

Solutions

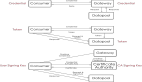

We have adopted a multi-stage execution approach for ClickHouse to replace its existing two-stage execution for complex queries. Like other distributed database engines, such as Presto, a complex query is divided into multiple Stages based on data exchanges. The exchanges are used to exchange data between the Stages. There are three primary forms of data exchange between the Stages.

Shuffle based on single or multiple keys

"Gather" is the process of gathering data from one or more nodes to a single node.

"Broadcast" is the process of copying the same data to multiple nodes.

When executing a single Stage, continue to reuse the current underlying execution of ClickHouse. Development is divided into different modules based on the function. Each module predetermined interfaces to reduce the dependencies and coupling between them. It will not affect other modules if the module or its internal logic is changed. It should be noted that the plug-in architecture provides flexible configurations for models, which allows for a variety of policies to be implemented according to different business scenarios.

Upon accepting a complex query, the Coordinator inserts an ExchangeNode and generates a distributed plan based on the current syntax tree, node type, and data distribution. Afterward, the Coordinator node will split plans by ExchangeNode type, creating execution plans for each Stage.

After that, the Coordinator node calls the SegmentScheduler to send the PlanSegment of each Stage to the Workers. Upon receiving the PlanSegment, the InterpreterPlanSegment completes the reading and executing of the data, and the data interaction is completed through the ExchangeManager. Finally, the Coordinator retrieves the data from the ExchangeManager corresponding to the last round of Stage and returns it to the Client.

SegmentScheduler schedules different PlanSegments. Based on specific scheduling policies, upstream and downstream dependencies, data distribution, Stage parallelism, and workstation distribution and status, PlanSegment will be sent to different Worker nodes.

Our current distribution and scheduling strategies are based on two main strategies.

First, dependency scheduling defines the topology based on Stage dependencies, generates a DAG graph, and schedules Stages following the DAG graph. For a two-table Stage, the left/right table reading Stage will be scheduled first, then the Join Stage, as the Join Stage depends on the former.

The second strategy is the AllAtOnce approach, which calculates the information about each Stage before scheduling them all simultaneously.

These two strategies differ in fault tolerance, resource use, and latency. The first strategy relies on scheduling, which provides better fault tolerance. The ClickHouse data can be replicated multiple times. Therefore, if some nodes are having difficulty connecting, you will be able to try these replication nodes. In the case of subsequent dependent nodes, there is no need to perceive the previous Stages being executed.

Non-Source Stage is not dependent on data, so it is more fault-tolerant, provided the nodes that ensure Stage parallelism remains operational. In extreme cases, it may be necessary to reduce the Stage's parallelism to ensure the Query's normal execution. The scheduling is, however, dependent and cannot be completely parallel, thus increasing the scheduling time. With more Stages, the scheduling delay may take up a significant percentage of the overall SQL. This can be resolved by optimizing: For some without dependencies, parallelism can be enabled to the maximum extent possible. Certain nodes within the same Stage can be parallelized, as well as the Stage without dependencies.

The AllAtOnce strategy can significantly reduce the scheduling delay by parallelism. To prevent a large number of network IO threads, the number of threads can be controlled by means of asynchronization. But the disadvantage is that it is not as fault-tolerant as dependency scheduling, where the Workers of each Stage are determined before scheduling, and the entire Query will fail if one Worker has a connection exception during scheduling.

In another case, the process takes longer if a Stage is scheduled before the upstream data is ready. For example, in the case of Final's agg Stage, the data is unavailable until Partial agg is completed. Even though we have made some optimizations to free up no-load CPU resources, it consumes some resources and requires threads to execute.

ClickHouse query node execution is mainly in the form of SQL interacting with each other between nodes. After splitting the Stage, we need specific support execution plans that can execute a separate PlanSegment. Therefore, the central role of InterpreterPlanSegment is to accept a serialized PlanSegment and be able to run the entire PlanSegment logic on Worker nodes.

In addition, ByteDance has also made functional and performance enhancements, such as supporting a single Stage to handle multiple Joins, so that the number of Stages and some unnecessary transfers can be reduced, and the entire Join process can be completed with one single Stage. If an exception occurs, the exception information will be reported to the query segment scheduler. The scheduler will cancel the execution of the Workers of other Stages from the Query.

ExchangeManager is the medium for PlanSegment data exchange, which can balance the ability of upstream and downstream data processing. Overall, our design uses Push with queuing to actively push to the downstream when the upstream data is ready, and supports the ability of backpressure on top of that.

Throughout the process, both the upstream and the downstream will optimize sending and reading through queues. Meanwhile, upstream and downstream will have their own queues. When the upstream processing speed is fast, the downstream processing speed is slower, as a result, the downstream cannot handle the upstream processing speed, and backpressure will control the upstream execution speed.

Since push and queuing are used, a relatively unique situation needs to be considered: in some cases, the downstream stage does not have to read all the upstream data. In the case of Limit100, the downstream only needs to read 100 pieces of data, while the upstream may generate a large amount of data. Therefore, when the downstream Stage has read enough data, it should be able to cancel the execution of the upstream Stage and empty the queue.

ExchangeManager uses more optimization points, such as fine-grained memory control, allowing memory to be controlled according to multiple levels, such as instance, query, segment, etc., to avoid OOM. Moreover, there are longer-term considerations in scenarios with low latency requirements and large data volumes. The first objective is to reduce memory usage by spilling data into disks.

The second is to Merge for small data and Split for large data to improve transfer efficiency. For example, in the Sort scenario, the network transmission process of Partial Sort and Merge Sort must be guaranteed to be orderly. The transmitted data cannot be disordered, or Merge Sort will not be performed properly, and the results will be affected.

The third aspect is the reuse of connections and the Optimization of the network. For example, when the upstream and downstream are in the same node, it should go to the memory exchange as far as possible, but not the network. As a result, network overhead and data serialization/deserialization costs are reduced. In addition, ClickHouse performs very effective Optimization on computings, which may cause its memory bandwidth to be a bottleneck in some instances. For specific ExchangeManager scenarios, zero-copy and other optimizations may be used to reduce memory copies.

The fourth step is to handle and monitor exceptions. Compared to a single host, the abnormalities in distributed cases can be more complex and more difficult to perceive. By retrying the query, some nodes with short-term high load or exceptions can be avoided from affecting the query. Having proper monitoring will allow you to detect problems and correct them quickly. It will also allow you to perform more targeted optimization.

Optimization and diagnosis

One of these is the multiple implementations and optimizations of Join. Depending on the size and distribution of the data, you can choose the appropriate implementation of Join.

Currently, the most commonly used method is the Shuffle Join.

Broadcast Join, a scenario of a large table Join a small table, will broadcast the right table to all Worker nodes on the left table, which can avoid the transfer of data from the large left table.

When the left and right tables have been distributed according to the Join key and are connected, there is no need to exchange data, which means that data transfer can be reduced to a minimum.

The essence of network connection optimization is to reduce the establishment and use of connections. Especially when the data needs to Shuffle, each node in the next round of Stage has to pull data from each node in the upstream Stage. Clusters with a high number of nodes and complex queries will establish a large number of connections.

At present, ByteDance's ClickHouse cluster is huge. With ClickHouse's two-stage execution, the maximum number of connections that can be established on a single host is tens of thousands due to its high concurrent capacity. Therefore, it is necessary to perform Optimization of network connections, in particular, to support the reuse of connections, where multiple Stage queries can run on each connection. By reusing connections as much as possible, a fixed number of connections can be established between different nodes, which are reused by different Query and Stage, and the number of connections does not grow as the size of Query and Stage grows.

Network transfer optimization is another aspect. In a data center, remote direct memory access is usually referred to as RDMA, a technology that can go beyond the kernel of the remote host operating system to access data in memory. Since this technique does not require a connection to the operating system, it not only saves a large number of CPU resources but likewise increases system throughput and reduces network communication latency, which is especially suitable for massively parallel computer clusters.

The ClickHouse platform does a significant amount of Optimization at the computational level, and the network bandwidth is generally smaller than the memory bandwidth. However, in some scenarios where the amount of data is considerable, the network transfer can become a bottleneck. To improve network transmission efficiency and data exchange throughput, you can introduce compression to reduce the amount of transmitted data and use RDMA to reduce some overhead. The results of testing indicate that there are significant gains in some scenarios with high data transfer volumes.

Many other databases also use Runtime Filter for Optimization, and the Join operator is usually the most time-consuming operator in the OLAP engine. Two methods can be used to optimize it. A possible solution would be to improve the performance of the Join operator. For example, for HashJoin, you can optimize the implementation of HashTable; or you can implement a better hashing algorithm, including parallelization.

Furthermore, suppose the operator itself is time-consuming and heavyweight. In that case, the data that the operator processes can be reduced. Then the Runtime Filter is more appropriate in some cases, especially where the fact table joins multiple dimension tables. A fact table in these scenarios is typically massive, and most filtering conditions are set on top of the dimension tables.

By filtering out input data that will not meet the Join conditions in advance at the Probe side of the Join, Runtime Filter significantly reduces data transfer and computation in Join. This will reduce the overall execution time. Due to this, we also support Runtime Filter on complex queries, and currently, we mainly support Min, Max, and Bloom Filters.

If the runtime filter's column (Join column) is built with an index (primary key, skip index...), it is necessary to regenerate the pipeline. Since the hit index may reduce the data read, the parallelism of the pipeline and the range of processing of the related data may be changed as a result. Suppose the columns of the runtime filter are not related to the index. In that case, you can pre-generate the filter conditions when the plan is generated, which is empty at the beginning and only a placeholder, and change the placeholder information to the actual filter conditions when the runtime filter is sent. Thus, even if the runtime filter timeout is issued and the query segment has already begun to execute, the subsequent data may still be filtered as long as the execution has not ended.

However, it should be noted that Runtime Filter is a unique optimization that applies to instances where the right table data volume is not large, and Runtime Filter has a better filtering effect on the left table. Generally, suppose the data volume of the right table is large, then the Runtime Filter building will take longer or will not affect the data filtering in the left table, increasing query time and overhead. Hence, it is crucial to determine whether to turn on Optimization based on the characteristics and size of your data.

Performance diagnosis and analysis are critical for complex queries. The pattern of SQL execution becomes complex due to introducing a multi-stage model for complex queries. Optimization begins with improving all sorts of Metrics, including Query execution time, the execution time of different Stages, start time, end time, amount of IO data to process, data processed by operators, execution status, as well as some Profile Events (such as Runtime Filter's build time, data volume to filter, and other Metrics).

Second, we record the backpressure information and the upstream and downstream queue lengths to infer the execution and bottlenecks of the Stage.

It can be generally assumed that:

When the number of input and output queues are both low or high, it indicates that the current stage is processing normally or is being backpressure downstream. Backpressure information can be used to determine the cause further.

When the number of input and output queues are not the same, this may be an intermediate state of backpressure transmission, or the stage itself is the source of backpressure.

Stages with large output queues that are often backpressure are usually affected by downstream stages. Consequently, you can eliminate the possibility that it is the source of backpressure itself and focus more on its downstream effects.

Stages with low output queues but high input queues are likely to be sources of backpressure. Hence, Optimization aims to improve this stage's processing power.

SQL scenarios are generally diverse, with complex scenarios sometimes requiring your understanding of the engine to provide optimization suggestions. It is currently ByteDance's goal to improve further these experiences, as well as the path of Metrics and Analysis to continuously reduce the burden of Oncall and provide more accurate optimization recommendations in some scenarios.

Perspectives and effectiveness

There are three flaws in the current execution model, and we have optimized complex queries by promoting a new model. As follows, we will test the new model's ability to resolve our problems.

Calculating the second stage is more complex, and more data is available for the first stage.

Hash Join's right table is a large one.

Multi-table Join for complex Query simulation.

SSB 1T data is used for the data set, and an 8-node cluster is used for the environment.

Case1 - The second stage is computationally complex. There is a relatively heavy computational operator UniqExact, which is the computation of count distinct, causing de-duplication by Hash table. Count different uses this algorithm by default, and when we make complex queries, it reduces the execution time from 8.5 seconds to 2.198 seconds. The agg uniqExact algorithm merge in the second stage was originally done by coordinator single-point merge, but now it can be done by multiple nodes in parallel through the group by the key shuffle. Therefore, shuffle reduces the pressure on coordinators to merge agg.

Case2 - The right table is large. Considering ClickHouse's Optimization of multiple tables is still in the early stages, here is a subquery to push down the filter conditions. Lineorder is a large table, and implementing the complex query pattern optimizes the Query execution time from 17 seconds to 1.7 seconds. As Lineorder is a large table, data can be Shuffled to each Worker node based on the Join key, thereby reducing the pressure of building the right table.

Case3 - Multi-table Join. With a complex query, query execution time is optimized from 8.58 seconds to 4.464 seconds, allowing all the right tables to process and build data simultaneously. It would be better to turn on the runtime filter here than the existing schema.

In fact, the optimizer also improves performance for complex queries significantly. RBO rules, such as the push-down of common predicates and the processing of related subqueries, can enhance the efficiency of SQL's execution. With the optimizer in complex query mode, users do not need to do complex tasks themselves since the optimizer will automatically perform these optimizations.

Furthermore, the choice of the Join implementation can significantly affect performance. If the Join Key distribution can be met, the cost of the left and right table shuffle can be reduced by using Colocate Joins. For multi-table Joins, the order in which the Joins are implemented and how they are applied will significantly impact the execution time more than for two-table Joins. By utilizing the statistical information in such data, a better execution pattern can be obtained by optimizing the CBO.

Using the optimizer, business departments can write any SQL according to their business logic. The engine automatically calculates the relatively optimal SQL plan for them and executes it, thus speeding up the entire process.

In summary, ClickHouse's current execution model performs well in many single-table scenarios; therefore, we mainly optimize for complex scenarios by implementing a multi-Stage model, data transfer between Stages, and applying engineering practices to improve execution and network transmission performance. We hope to lower SQL analysis and tuning threshold by enhancing Metrics and intelligent diagnosis. While the first step has been achieved, there is still much work for future ByteDance events.

The priority is to continue to improve the performance of the execution and Exchange processes. The focus is not on engine optimization in general (such as index optimization or arithmetic optimization) but rather on the Optimization of complex query schemas. A Stage reuse approach may be advantageous in scenarios where SQL results are reused repeatedly, such as in multi-table joins and CTE applications. Previously, we have supported stage reuse; however, it is used in fewer scenarios. We are committed to being more flexible and general in the future.

The Metrics and smart diagnosis will also be enhanced. SQL's high flexibility makes it difficult to diagnose and tune some complex queries without Metrics. In the future, ByteDance will continue to make efforts in this direction with its data platform.

Guest Introduction

Mr. Dong Yifeng, senior R&D engineer for the ByteDance data platform. Dong is responsible for ByteDance's enterprise-class experiment platform team and is committed to building the industry's most advanced and valuable experiment platform, transforming A/B testing into infrastructure for business growth. His contributions include creating Libra, a new middle platform for ByteDance, which serves more than 500 business lines, including Douyin, Tiktok, and Toutiao; and launched products such as DataTester and BytePlus Optimize.